by J. Laurie Snell

Student days

Snell: How did you get interested in mathematics?

Doob: I have always wanted to understand what I was doing and why I was doing it, and I have often been a pest because I have objected when what I heard or read was not to be taken literally. The boy who noticed that the emperor wasn’t dressed and objected loudly has always been my model. Mathematics seemed to match my psychology, a mistake reflecting the fact that somehow I did not take into account that mathematics is created by humans.

I made a crystal radio set in grammar school, became more and more interested in radio as I moved into high school, learned Morse code and built and operated a radio transmitter after passing the licensing examination. Thus it was natural that I thought I would major in mathematical physics in college. On the other hand, although I did very well in the first-year college physics course, it left me uneasy because I never felt I knew what was going on, even though I could solve all the assigned problems. The final blow came when I registered in a course in electricity, endured several class sessions full of pictures of diesel electric locomotives and decided that if this was physics I was deserting the subject, and I transferred to a mathematics course. This decision demonstrated that I had no idea of how to choose courses and was too much a loner to think of asking for advice. At any rate the result was that in the first semester of my sophomore year I was registered in three mathematics courses.

Snell: That college was Harvard. Harvard has always had a great mathematics department but today Harvard is even involved in the calculus reform. I assume that was not the case in your day. What was Harvard like when you were a student?

Doob: I knew nothing about college education or colleges, but applied to Harvard on the advice of my high school principal, and was accepted without examination because I was in the upper seventh of my high school class. The Harvard mathematics department was one of the best research departments in the country when I arrived in September of 1926, but the Harvard mathematics curriculum was extraordinarily slow paced. Freshman math was a semester of analytic geometry followed by a semester of calculus. Sophomore calculus treated differential calculus and a smattering of integration. Junior calculus introduced partial derivatives, integration of rational functions, and multiple integrals. In these three years there were a few remarks about limits but no epsilons. The first senior-graduate analysis course was Analytic Functions and introduced epsilon-delta methods. The text was Osgood’s Funktionentheorie and many students had to learn German, epsilon-delta methods and complex function theory at the same time. In those days there were very few advanced mathematics books in English, and those in French and German were too expensive for most students. My freshman calculus section, taught by a graduate student, was the only mathematics course I ever attended that gave me positive enjoyment. The applications of derivation thrilled me.

My sophomore calculus teacher was William Fogg Osgood. I did not suspect that he was an internationally famous mathematician, and of course I had no idea of mathematical research, publication in research journals or what it took to be a university professor. Osgood was a large, bearded, portly gentleman who took life and mathematics very seriously and walked up and down in front of the blackboard making ponderous statements. After a few weeks of his class I appealed to my adviser, Marshall Stone, to get me into a calculus section with a more lively teacher. Of course Stone did not waste sympathy on a student who complained that a teacher got on his nerves, and he advised me that if I found sophomore calculus too slow I should take junior calculus at the same time!

That would put me in the junior course after having missed its first weeks and without the background of most of the sophomore course, but the Harvard pace was so slow that the suggestion was not absurd. I stayed and suffered in the sophomore course and simultaneously sat in on the junior course. When midterm exams were given I was still completely lost in the junior course but caught up during Christmas vacation. Through the fortunate accident of having a tedious instructor I had gained a year! The analytic function course, taken in my junior year with Osgood as teacher, was my first course in rigorous analysis and I took to it right away in spite of his mannerisms.

Snell: You also did your graduate work at Harvard. What was that like?

Doob: When I graduated in 1930 and it was time to think about a Ph.D. degree, I asked Stone to be my adviser. He told me he had no problems for me, that I should go to J. L. Walsh, who always had many problems. Walsh accepted me and we had a fine relationship: he never bothered me, and conversely. Harvard suited my character in that there was so little supervision that I could neglect classes for a considerable time while cultivating a side interest, sometimes mathematical, sometimes not. Moreover there was a mathematics reading room in the library building, containing a collection independent of the main mathematics collection in the stacks. This room was an ideal base for a mathematics student who wanted to get an idea of what math was all about. Even the fact that the Harvard library was then badly run had its advantages. I soon found out that if I requested a book at the main desk, the book would frequently not be found, but that one could always find interesting books by wandering around in the stacks. The defects of the library advanced my general education.

Wladimir Seidel was a young Ph.D. at Harvard when I was there. We discussed a lemma he needed for a paper he was writing on the cluster values of an analytic function at a boundary point of its disk domain of definition. If we had known more about the Poisson integral, we would have realized that the problem was trivial. I worked out a complicated iterative geometric procedure to solve the problem, and he thanked me in a footnote, the first published reference to me. This episode is a fine example of the value of ignorance. If I had known more about the Poisson integral, I would have pointed out the proof of his lemma to Seidel and nothing more would have come of it. As it was, the lemma made me think about the relation between analytic functions and their limit values at the boundaries of their domains, and led to my doctor’s thesis.

When I finished what I hoped was my thesis I showed it to Walsh, who did not read it but asked Seidel, who had not read it, what he thought about it. Seidel said it was fine and that was that; the thesis was approved and I got my doctor’s degree in 1932. Getting a Ph.D. in two years left me woefully ignorant of almost everything in mathematics not connected with my thesis work. I had missed fertile contact with Birkhoff, Kellogg and Morse, all three at Harvard and leaders in their fields. But I had benefited from Stone by way of typing for pay, and incidentally reading, most of his Linear Transformations in Hilbert Space, from which I learned much that was useful to me in later research.

Another useful thing I learned was editorial technique. I sent part of my thesis to the AMS Transactions. The Editor (J. D. Tamarkin) wrote me that he had read the first section of my paper, that it was OK and that he had not read the second section but it was too long. Since I did my own typing the solution was simple: I retyped the paper with smaller margins and each time I went from one line to the next I turned the roller back a bit to decrease the double line spacing. (Word processors, which would have simplified such an operation, unfortunately had not yet been invented.) The new version was accepted with no further objection. Tamarkin’s editorial report had not been a complete surprise to me because I had written a “minor thesis” for Harvard, a nonresearch Ph.D. requirement, which was accepted before I noticed that I had somehow omitted turning in one of the middle pages. Professor J. L. Coolidge read the thesis carefully enough to notice that his name was referred to but not carefully enough to notice that there was a skipped page number. A friendly young professor, H. W. Brinkmann, later secretly inserted the missing page for me.

From complex variables to probability

Snell: How did you find your way from complex variables theory to probability theory?

Doob: One summer in my graduate student years I sat in on a course in aesthetic measure, given by Birkhoff. He had developed a formula which gave a numerical value to works of art. Birkhoff was a first-rate mathematician, but it was never clear whether what would get high numbers was what he liked or what selected individuals liked, and, if the latter, what sort of individuals were selected. One bohemian-type student frustrated him by preferring irregular to regular designs, and in despair he told her that she was exceptional. In my youthful brashness I kept challenging him on the absence of definitions and he finally came to class one day, carefully focused his eyes on the ceiling and said that those not registered in the class really had no right to attend. I took the hint and attended no further classes. But Birkhoff bore me no grudge, and when I was wondering what to do after getting my Ph.D. he said I should go on the research circuit. It was obviously through his influence that I was given a National Research Council Fellowship for two years, to work at Columbia University with J. F. Ritt. Ritt was a good mathematician, but his work was not in my field. I had chosen Columbia University because my wife was a medical student in New York.

Ritt and I published a joint paper, to which I had made two contributions: (1) I typed it (he had married a professional typist who agreed to type his mathematics if he would get an office typewriter instead of his portable, a concession he refused to make); (2) I contributed the adjective “lexicographical” to an order he had devised.

I have a poor memory, and cultural reading of mathematics has never been of use to me. I have been a reviewer for Mathematical Reviews and a referee for various journals, but the papers I read in carrying out these duties were immediately forgotten. This memory lack meant that my mathematical background has been quite superficial, restricted to the context of my own research. Paul Lévy once told me that reading other writers’ mathematics gave him physical pain. I was not so sensitive, but reading did me no good unless I was carrying on research related to the reading, and even then it took me a long time to get the material in a form I could understand and remember. Because of these characteristics I have never been able to accomplish anything mathematically when I did not have a definite program. In New York, aside from exploiting further the ideas of my Ph.D. thesis I was wasting my time. I decided that if I was to go further in complex variable theory I would have to get into topology, and for some reason I was reluctant to do this. Furthermore I was demoralized by the deep depression. The streets were full of unemployed asking for handouts or selling apples to make a few cents, and no jobs were opening up either in industry or academia. After two years in New York I still had no job prospects, even though I humiliated myself trotting after big shots at AMS meetings. B. O. Koopman at Columbia told me that I should approach Harold Hotelling, Professor of Statistics there, that there was money in probability and statistics. Hotelling said he could get me a Carnegie Corporation grant to work with him, and thus the force of economic circumstances got me into probability.

Snell: Of course, there were not standard books on probability theory in those days. How did you go about learning probability?

Doob: Poincaré wrote in 1912 that one could scarcely give a satisfactory definition of probability. One cannot tell whether he was thinking of mathematical or nonmathematical contexts or whether he distinguished between them. The distinction is frequently ignored even now. It was not clear in the early 1930s that there could be a mathematical counterpart, at the same level as the rest of mathematics, of the nonmathematical context adorned with the name “probability.” In 1935, Egon Pearson told me that probability was so linked with statistics that, although it was possible to teach probability separately, such a project would just be a tour de force.

I became so rigidly intolerant of the current loose language that I ignored the textbooks, and I understood the interpretation of the Birkhoff ergodic theorem as the strong law of large numbers for a stationary sequence of random variables before I knew the Chebyshev inequality proof of the weak law of large numbers for a sequence of mutually independent identically distributed random variables! On the other hand Koopman, who showed me that proof and who was a pioneer in ergodic theory, did not realize that the ergodic theorem had anything to do with probability until Norbert Wiener and I told him the connection at an American Mathematical Society meeting.
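For readers who have not seen it, the Chebyshev-inequality proof of the weak law that Doob mentions can be sketched in one line. The sketch assumes, as the usual textbook version does, that the common distribution has mean \( \mu \) and finite variance \( \sigma^2 \); independence is used only to get \( \operatorname{Var}(S_n) = n\sigma^2 \).

```latex
% X_1, X_2, ... mutually independent, identically distributed,
% mean mu, finite variance sigma^2 (assumed); S_n = X_1 + ... + X_n.
\[
\Pr\!\left(\left|\frac{S_n}{n}-\mu\right|\ge\varepsilon\right)
\;\le\; \frac{\operatorname{Var}(S_n/n)}{\varepsilon^{2}}
\;=\; \frac{n\sigma^{2}/n^{2}}{\varepsilon^{2}}
\;=\; \frac{\sigma^{2}}{n\varepsilon^{2}}
\;\longrightarrow\; 0 \qquad (n\to\infty,\ \text{each fixed } \varepsilon>0).
\]
```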

Kolmogorov’s 1933 monograph on the foundations of (mathematical) probability appeared just when I was desperately trying to find out what the subject was all about. He gave measure-theoretic definitions of probability, of random variables and their expectations and of conditional expectations. He also constructed probability measures in infinite-dimensional coordinate spaces. Kolmogorov did not state that the set of coordinate variables of such a space constitutes a model for a collection of random variables with given compatible joint distributions, and I am ashamed to say that I completely missed the point of that section of his monograph, only realizing it after I had constructed some infinite-dimensional product measures in the course of my own research. Kolmogorov defined a random variable as a measurable function on a probability measure space, but there is a wide gap between accepting a definition and taking it seriously. It was a shock for probabilists to realize that a function is glorified into a random variable as soon as its domain is assigned a probability distribution with respect to which the function is measurable. In a 1934 class discussion of bivariate normal distributions Hotelling remarked that zero correlation of two jointly normally distributed random variables implied independence, but it was not known whether the random variables of an uncorrelated pair were necessarily independent. Of course he understood me at once when I remarked after class that the interval \( [0,2\pi] \) when endowed with Lebesgue measure divided by \( 2\pi \) is a probability measure space, and that on this space the sine and cosine functions are uncorrelated but not independent random variables. He had not digested the idea that a trigonometric function is a random variable relative to any Borel probability measure on its domain.
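Doob's sine and cosine example can be checked numerically. The sketch below is only an illustration, not a proof: it assumes a midpoint-rule average over a fine grid as a stand-in for integration against Lebesgue measure divided by \( 2\pi \), and the grid size and the events \( A = \{|\sin X| > 0.9\} \), \( B = \{|\cos X| > 0.9\} \) are arbitrary choices made here. The covariance comes out at rounding-error level, while \( \Pr(A \cap B) = 0 \) even though \( \Pr(A)\Pr(B) > 0 \), because \( \sin^2 + \cos^2 = 1 \) prevents both from being large at once.

```python
import math

def uniform_grid(n=100_000):
    """Midpoints of n equal subintervals of [0, 2*pi]. Averaging a function
    over them approximates its integral against Lebesgue measure / (2*pi)."""
    h = 2 * math.pi / n
    return [(k + 0.5) * h for k in range(n)]

def expect(f, grid):
    """Approximate E[f(X)] for X uniform on [0, 2*pi] (midpoint rule)."""
    return sum(f(x) for x in grid) / len(grid)

grid = uniform_grid()

# Uncorrelated: E[sin X cos X] - E[sin X] E[cos X] is (numerically) zero.
mean_sin = expect(math.sin, grid)
mean_cos = expect(math.cos, grid)
cov = expect(lambda x: math.sin(x) * math.cos(x), grid) - mean_sin * mean_cos

# Not independent: independence would force P(A and B) = P(A) * P(B),
# with A = {|sin X| > 0.9} and B = {|cos X| > 0.9} (hypothetical thresholds).
p_a = expect(lambda x: abs(math.sin(x)) > 0.9, grid)
p_b = expect(lambda x: abs(math.cos(x)) > 0.9, grid)
p_ab = expect(lambda x: abs(math.sin(x)) > 0.9 and abs(math.cos(x)) > 0.9, grid)

print(f"covariance ~ {cov:.2e}")
print(f"P(A) * P(B) ~ {p_a * p_b:.4f}, P(A and B) = {p_ab:.4f}")
```

Any threshold above \( 1/\sqrt{2} \) gives the same conclusion, since both absolute values cannot exceed it simultaneously.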
The fact that nonprobabilists commonly denote functions by \( f \), \( g \) and so on, whereas probabilists tend to call functions random variables and use the notation \( x \), \( y \) and so on at the other end of the alphabet, helped to make nonprobabilists suspect that mathematical probability was hocus pocus rather than mathematics. And the fact that probabilists called some integrals “expectations” and used the letter \( E \) or \( M \) instead of integral signs strengthened the suspicion.

My total ignorance of the field made me look at probability without traditional blinders. I cannot give a mathematically satisfactory definition of nonmathematical probability. For that matter I cannot give a mathematically satisfactory definition of a nonmathematical chair. The very idea of treating real life as mathematics seems inappropriate to me. But a guiding principle in my work has been the idea that every nonmathematical probabilistic assertion suggests a mathematical counterpart which sharpens the formulation of the nonmathematical assertion and may also have independent mathematical interest. This principle first led me to the (rather trivial) mathematical theorem corresponding to the fact that applying a system of gambling, in which independent identically distributed plays to bet on are chosen without foreknowledge but otherwise arbitrarily, does not change the odds. Much later, the idea that a fair game remained fair under optional sampling led me to martingale theory ideas.

The University of Illinois

Snell: Your first, and last, regular teaching job was at the University of Illinois. How did you end up there and what was it like in the early days at Illinois?

Doob: After three years of fellowships I finally received a job offer, from the University of Illinois in Urbana — rank of Associate, that is, Instructor’s pay for Assistant Professor duties. I was charmed by the small-town atmosphere of Urbana as soon as I arrived and never wanted to leave, even though the atmosphere changed through the years. I had never done any teaching but found teaching calculus to freshmen to be fun, once I had found out how to teach with a minimum of paper grading and preparation. In those days I could go into a class, ask where we were and go on from there. This technique unfortunately became less practical as my arteries hardened.

At first the advanced courses I gave were the bread-and-butter courses in real and complex variables. I had never worked out for myself or read any systematic approach to probability and had no feeling for what would be an appropriate sequence of topics. There was, however, pressure to teach probability because Paul Halmos and Warren Ambrose chose me as thesis adviser. They were both good enough to be guided through outside reading, but there was actually not much reading that I felt was adequate. A decent course based on the measure theory taught in those days had to discuss measures on abstract spaces, Borel measurable functions and the Radon–Nikodym theorem. And when I finally was pushed into teaching probability it was necessary to learn first and then discuss in detail such elementary, but new to me, subjects as Bernoulli distributions and Stirling’s formula.

Then and later the most embarrassing probability class lecture was the first, in which I tried to give a satisfying account of what happens when one tosses a coin. (A famous statistician told me that he solves the difficulty by never mentioning the context.) One wants to talk about a limit of a frequency, but “limit” has no meaning unless an infinite sequence is involved, and an infinite sequence is not an empirical concept. I made vague and heavily hedged remarks such as that the ratio I would like to have limit 1/2 “seems to tend to 1/2,” that the coin tosser “would be very much surprised if the ratio is not nearly 1/2 after a large number of tosses” and so on.

The students never seemed to be bothered by my vagueness. For that matter professionals who write about the subject are usually also unbothered, perhaps because they never seem to be tossing real coins in a real world under the influence of Newton’s laws, which somehow are not mentioned in the writing.

Writing the stochastic processes book

Snell: Few mathematical books have had the influence that Stochastic Processes [1] has had. How did you come to write this book?

Doob: In 1942, Veblen recruited me among others to go to Washington to work for the navy, in mine warfare. The work needed knowledge of elementary physics, editorial experience and common sense. The last, I found, was the rarest of the three and made me reluctant to apply advanced mathematics to practical problems with imprecise data. The problems were fascinatingly different from my mathematical work but I was necessarily an outsider, and I was bored intellectually. What I needed was a mathematical project I could work on without a library. I wrote a potboiler on the component processes of \( N \)-dimensional Gaussian Markov processes, and then Shewhart came to my rescue in 1945, when he invited me to write a book for the Wiley series in statistics. I decided to write a book on stochastic processes and that I would get Norbert Wiener to write a section on their application to electrical engineering. I knew nothing about such applications but had had several contacts with Wiener and knew that he was involved with electrical engineers at MIT. On the other hand I had a copy of his 1942 monograph, Extrapolation, Interpolation, and Smoothing of Stationary Time Series with Engineering Applications, classified “Confidential” (!), and was worried that it was so vague on the engineering applications. It cheered me slightly that he had a machine at MIT which purportedly was significant for antiaircraft gunnery. But when Feller and I visited him we found only a wonderful toy based on a moving spotlight controlled by a delayed action lever. Feller and I played with it for a few minutes and managed to put it out of commission.

I started the book in Washington, doing only topics I could handle at home without a library. In 1946, when I was back in Urbana, Wiener visited Urbana to help dedicate the new electrical engineering building. It turned out that his idea of contributing to my book was to walk up and down on my porch making general remarks on communication theory, remarks which presumably I was to work up. I had great respect for Wiener’s work in probability and now have even more for his fundamental work in potential theory, but I did not see any substance in his remarks and delicately persuaded him that what he was talking about was not quite suitable for my book. The only result of his temporary role was that I inserted a couple of chapters on prediction theory in the book. They are somewhat out of character with the rest of the book but I had put so much work into getting them into what I thought was reasonable form that I did not have the heart to omit them. I wanted to remove the mystery from a straightforward problem of least squares approximation, largely solved by Szegö in 1920 and jazzed up by the probabilistic interpretation.

I intended to minimize explicit measure theory in the book because many probabilists were complaining that measure theory was killing the charm of their subject without contributing anything new. My idea was to assume as known the standard operations on expectations and conditional expectations and not even use the nasty word “measure.” This idea got me into trouble. My circumlocutions soon became so obscure that the text became unreadable and I was forced to make the measure theory explicit. I joked in my introduction that the unreadability of my final version might give readers an idea of that of the first version, but like so many of my jokes it fell flat. I was grateful that at least J. W. T. Youngs noticed it, but I was less grateful that it apparently mystified the Russian translators of the book, who simply omitted it.

As it turned out, one of the main accomplishments of my book was to make probability theory mathematically respectable by establishing the key role of measure theory not just in the basic definitions but also in the further working out. More precisely it became clear, or should have become clear, that mathematical probability is simply a specialization of measure theory. I must admit, however, that, although every mathematician classifies measure theory as a part of analysis, many probabilists consider that a study of sample functions is “probability,” whereas a study of distributions of random variables is “analysis.” This distinction mystifies me. While writing my book I had an argument with Feller. He asserted that everyone said “random variable” and I asserted that everyone said “chance variable.” We obviously had to use the same name in our books, so we decided the issue by a stochastic procedure. That is, we tossed for it and he won.

I wrote my Stochastic Processes book in the way I have always written mathematics. That is, I wrote with only a vague idea of what I was to cover. I had no idea I would sweat blood working up new inequalities for characteristic functions of random variables in order to make straightforward the derivation of the Lévy formula for the characteristic function of an infinitely divisible distribution. It was only a long time after I started that I decided it would be absurd to include convergence of sums of mutually independent random variables and the corresponding limits of averages (laws of large numbers) without also including the analogous results for convergence of sums of mutually orthogonal random variables and the corresponding limits of averages. And I had no idea ahead of time how the martingale discussion would develop.

After the book was published in 1953, I thought that the popularity of martingale theory was because of the catchy name “martingale,” just as everyone was intrigued by my proposal (which actually never came to anything, although financing was available) that the University of Illinois should sponsor a probability institute, to be called the “Probstitute.” Of course martingale theory had so many applications in and outside of probability that it had no need of the catchy name.

When the Stochastic Processes book came out I had the best possible proof that it actually was read carefully: a blizzard of letters arrived pointing out mistakes. My second book on probability and potential theory had no such reception.

Martingales

Snell: Your Stochastic Processes book established martingales as one of the small number of important types of stochastic processes. How did you get interested in martingales?

Doob: When I started to study probability one of my goals was to obtain mathematical statements and proofs of common probabilistic assertions which had not yet been properly formulated. One of the first theorems I proved in pursuing this program was a formulation of the fact that, in the context of independent plays with a common distribution, no system of betting in which the plays to bet on depend on the results of previous plays changes the odds. This result was one of the first to make a properly defined random time an essential feature of a mathematical discussion. Von Mises had postulated a version of this result in an attempt to put probability as applied to a sequence of independent trials on a rigorous mathematical basis. His version was suggestive but it was not mathematics.
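A short simulation can make the statement concrete. The sketch below is an illustration under stated assumptions, not Doob's formulation or proof: the plays are simulated coin tosses with a hypothetical heads probability of 0.3, and the hypothetical system bets on a play only after two consecutive tails. Because the decision to bet on a play depends only on earlier plays, the frequency of heads among the selected plays should match the overall frequency, up to sampling error.

```python
import random

def selected_frequency(n_plays, p_heads, seed=0):
    """Simulate n_plays independent plays (True = heads, probability p_heads).

    A hypothetical betting system bets on play k exactly when plays k-2 and
    k-1 were both tails. Returns the overall frequency of heads, the
    frequency of heads among the selected plays, and the number selected.
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    plays = [rng.random() < p_heads for _ in range(n_plays)]
    # The decision for play k uses only plays k-2 and k-1: no foreknowledge.
    selected = [plays[k] for k in range(2, n_plays)
                if not plays[k - 1] and not plays[k - 2]]
    overall = sum(plays) / n_plays
    among_selected = sum(selected) / len(selected)
    return overall, among_selected, len(selected)

overall, chosen, n_chosen = selected_frequency(200_000, p_heads=0.3)
print(f"heads overall: {overall:.3f}")
print(f"heads on the {n_chosen} selected plays: {chosen:.3f}")
```

Any other rule that looks only at earlier plays should likewise leave the selected frequency near 0.3; a rule allowed to inspect `plays[k]` itself before deciding would not.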

I was given Jean Ville’s 1939 book to review, in which he did not formally define a martingale but proved the maximum inequality for a martingale sequence and used it to prove the strong law of large numbers for Bernoulli trials. His work intrigued me and, once I had formulated the martingale definition, the fact that the definition suggests a version of the idea of a fair game suggested the introduction of what are now called optional times and the derivation of conditions for which sampling of a martingale sequence at an increasing sequence of optional times preserves the martingale property. This investigation in turn led to the idea of a measurable space filtered by an increasing sequence of sigma algebras of measurable sets, successive pasts of a process, which has proved very fruitful. I did not appreciate the power of martingale theory until I worked on it in the course of writing my 1953 book, but the vague idea that if one knows more and more about something one has a monotone context in some sense, and thus there ought to be convergence, suggests that under appropriate analytic conditions a martingale sequence should converge. I did not realize when I started that, long before I studied martingale sequences, they had been studied by Serge Bernstein, Lévy and Kolmogorov. The martingale definition led at once to the idea of sub- and supermartingales, and it was clear that these were the appropriate names but, as I remarked in my 1984 book [2], the name “supermartingale” was spoiled for me by the fact that every evening the exploits of “Superman” were played on the radio by one of my children. If I had been doing my work at the university rather than at home, I am sure I would not have used the ridiculous names semi- and lower semimartingales for sub- and supermartingales in my 1953 book. Perhaps I should have noted that one reason for the success of that book is the prestigious-sounding title, a translation of a name in a German Khintchine paper.
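In modern notation, which differs from the names used in the 1953 book, the definitions and the maximal inequality mentioned above can be stated as follows. This is a compact sketch for a sequence \( X_0, X_1, \dots \) adapted to an increasing sequence of sigma algebras \( \mathcal{F}_0 \subseteq \mathcal{F}_1 \subseteq \cdots \) (the successive pasts); it is not a reproduction of Ville's or Doob's original statements.

```latex
% Martingale: integrable, adapted, and "fair" given the past.
\[
\mathbb{E}\,|X_n| < \infty, \qquad
X_n \text{ is } \mathcal{F}_n\text{-measurable}, \qquad
\mathbb{E}[X_{n+1} \mid \mathcal{F}_n] = X_n \quad \text{a.s.}
\]
% Replace "=" by ">=" for a submartingale and by "<=" for a supermartingale.
% Maximal inequality for a martingale (|X_n| is a nonnegative submartingale):
\[
\lambda \,\Pr\Bigl(\max_{0 \le k \le n} |X_k| \ge \lambda\Bigr) \;\le\; \mathbb{E}\,|X_n|,
\qquad \lambda > 0 .
\]
```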

Research and publication

Snell: Since I was a student when you were writing your Stochastic Processes book, I got a preview. I remember two things that amazed me. One was that you typed all seven versions (pick-punch), and the other was that it did not have a lot of examples.

Doob: My inclination has always been to look for general theories and to avoid computation. A discussion I once had with Feller in a New York subway illustrates this attitude and its limitations. We were discussing the Markov property and I remarked that the Chapman–Kolmogorov equation did not make a process Markovian. This statement satisfied me, but not Feller, who liked computation and examples as well as theory. It was characteristic of our attitudes that at first he did not believe me but then went to the trouble of constructing a simple example to prove my assertion.
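For reference, here is the Chapman–Kolmogorov equation in its simplest form, assuming for brevity countably many states and \( n \)-step transition probabilities that do not depend on the starting time. The equation involves only two-time transition probabilities, whereas the Markov property conditions on the whole past; that gap is why the first does not imply the second. The example Feller constructed is not described in the conversation and is not reproduced here.

```latex
% n-step transition probabilities (time-homogeneous case assumed):
%   p_{ij}^{(n)} = Pr(X_{t+n} = j | X_t = i)
\[
p_{ij}^{(m+n)} \;=\; \sum_{k} p_{ik}^{(m)}\, p_{kj}^{(n)}
\qquad \text{(Chapman--Kolmogorov)}
\]
% Markov property: conditioning on the entire past adds nothing.
\[
\Pr(X_{t+1} = j \mid X_t = i,\, X_{t-1}, \dots, X_0) \;=\; \Pr(X_{t+1} = j \mid X_t = i)
\]
```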

Feller was the first mathematical probabilist I had ever met and, meeting him at a Dartmouth meeting of the AMS around 1940, I felt like Livingstone when Stanley found him in Africa. I envied the Russian probability group, but Kolmogorov, who included statisticians among the probabilists, told me around that time how he envied the fact that the U.S. had so many probabilists!

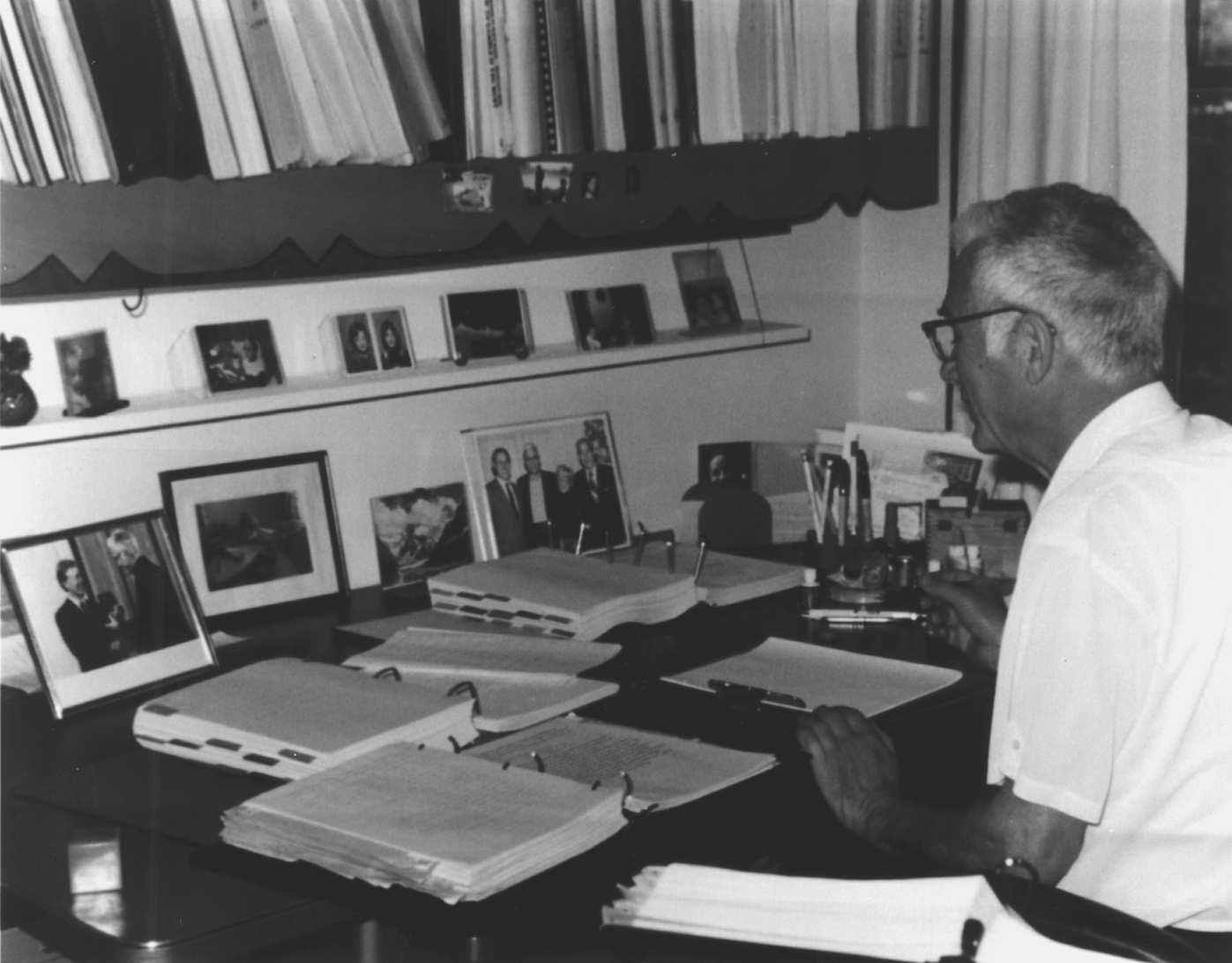

I corresponded with many mathematicians but never had detailed interplay with any but Kai Lai Chung and P.-A. Meyer in probability and Brelot in potential theory. My instincts were to work alone and even to collect enough books and reprints so that I could do all my work at home. My memory was so bad that I had difficulty discussing even my own results with other mathematicians.

My system of writing mathematics, whether a research paper or a book, was to write material longhand, with many erasures, with only a vague idea of what would be included. I would see where the math led me just as some novelists are said to let the characters in their books develop on their own, and I would get so tired of the subject after working on it for a while that I would start typing the material before it had assumed final form. Thus in writing even a short paper I would start typing the beginning before I knew what results I would get at the end. Originally I wrote in ink, applying ink eradicator as needed. Feller visited me once and told me he used pencil. We argued the issue, but the next time we met we found that each had convinced the other: he had switched to ink and I to pencil.

My system, complicated by my inaccurate typing, led to retyping material over and over, and for some time I had an electric drill on my desk, provided with an eraser bit which I used to erase typing. I rarely used the system of brushing white fluid over a typed error because I was not patient enough to let the fluid dry before retyping. Long after my first book was done I discovered the tape rolls which cover lines of type. As I typed and retyped my work it became so repugnant to me that I had more and more difficulty even to look at it to check it. This fact accounts for many slips that a careful reading would have discovered. I commonly used a stochastic system of checking, picking a page and then a place on the page at random and reading a few sentences, in order to avoid reading it in context and thereby to avoid reading what was in my mind rather than what I had written. At first I would catch something at almost every trial, and I would continue until several trials would yield nothing. I have tried this system on other authors, betting, for example, that I would find something to correct on a randomly chosen printed page of text, and nonmathematicians suffering under the delusion that mathematics is errorless would be surprised at how many bets I have won.

To my mind, the most boring part of mathematical research is the work involved in making historical remarks, and I always deferred that work to the last moment. That explains why my first two books have history in appendices, and the third has practically no references whatever. After writing my Stochastic Processes I swore, “Never again! No more books!” Many years later, however, it seemed to me that the literature on classical potential theory and its probability connections was so scattered that something should be done about it, and that accounts for my potential theory book [2], after the writing of which I renewed my earlier oath on book writing. But then after I retired I discovered computers, and — ever a gadgeteer — I was charmed by them but could find no excuse to buy one. When I discussed this problem with a retired physicist he told me he had the contents of his refrigerator listed in his computer, and of course this meant he had daily changes. This was not much encouragement, but finally I had an inspiration: if I could bring myself to write a third book, that would justify buying a computer. I had donated all my books and reprints to the Department of Statistics, so any book I wrote, working at home as usual, would have to be on a subject I knew very well that would not require visiting the campus to consult the library. I had taught measure theory several times and had my own ideas on how to develop the subject, ideas I had not used in my teaching, so I decided to make a compromise with my solemn oaths and write up measure theory for my own amusement, not for publication. In particular I wanted to integrate probabilistic ideas into standard measure theory, and I wanted to make systematic use of metric space ideas in measure theory. So I bought the simplest Macintosh computer and the word processor Microsoft Word. 
After frequent consultations and frantic telephone appeals for help to Halmos in California and Snell in New Hampshire, I had learned all the Word tricks I needed, including the rather mysterious system at the back of the Word manual for writing mathematical expressions, but the news of my writing had got out, and I was invited to publish it. This meant that I had to go over my material with more care than I had intended, and sure enough I found many serious errors, but the book was finally done and published. I am sure that Measure Theory [3] is my last book, if for no other reason than that at 87 I am now incapable of concentrated work and no longer think seriously about mathematics. Long ago, after hearing lectures by mathematicians who should have quit while they were ahead, I resolved to give no more lectures. The present maundering illustrates how right I was and that in addition I should have resolved to do no more writing.

Potential theory

Snell: Your mention of your potential theory book [2] reminds me that you went full circle from complex variable theory to probability and then back to complex variable theory. How did you become interested in potential theory?

Doob: As I remarked earlier, my first contact with rigorous analysis was a complex variable theory course taught by Osgood, using his Funktionentheorie. It is a sign of the backwardness of that theory that for many years \( f \) denoted a function outside the theory but \( f(z) \) denoted a function of a complex variable. Also I was taught that \( f \) had a derivative at \( w \) if the usual difference quotient had a limit at \( w \) when \( z \) approached \( w \) no matter how \( z \) approached \( w \). That qualification was still considered necessary in 1927! At any rate I was charmed by the subject and liked the text. I still do.

Kakutani’s 1944 probabilistic treatment of the Dirichlet problem combined two of my interests, complex variable theory and probability, and I decided to try to develop their interrelations further. I soon found that functions having certain average properties, such as harmonic and subharmonic functions, would play a key role and that these average properties suggested the application of martingale theory.

When I was invited to speak at the 1955 Berkeley Symposium on Probability and Statistics and had nothing to say, I arrived a few days early in Berkeley with an open mind and a portable typewriter. I decided to fulfill my Symposium obligation by defining a form of what is now called axiomatic potential theory, generalizing harmonic, subharmonic and superharmonic functions into functions defined on an abstract space and satisfying average properties suggested by those satisfied by these functions. This postulational approach was related to earlier work by other researchers, whose work I did not know at the time, but that work had not been linked to probability. Axiomatic potential theory has had an enormous expansion since those days. I soon found out that I had better learn more about classical potential theory and studied the fundamental work of Brelot, Cartan and Deny. My habit of taking definitions seriously suggested that Cartan’s fine topology should be applied in detail, and I developed it further and used it in studying limits of functions at the boundaries of their domains of definition. I thought then and still think that the fine topology should have applications in complex variable theory besides the application to the Fatou boundary limit theorem.

Of course I knew that Lebesgue’s theorem on the derivation of a measure on the line relative to Lebesgue measure had been generalized to derivation of any Borel measure on the line relative to a second one. This led me to wonder why the Fatou boundary limit theorem of a positive harmonic function, a theorem based on the derivation of a measure with respect to Lebesgue measure on the bounding circle, should not be generalized to cover the ratio of two positive harmonic functions, and I proved this generalization. I had already noted that the quotient of a positive superharmonic function divided by a positive harmonic function satisfied an average inequality like that of a superharmonic function, with an averaging measure depending on the denominator function. The corresponding ideas in probability theory led to quotients of positive martingales and to what are now called \( h \)-path processes in Markov process theory.

Hunt’s great papers on the potential theory generated by Markov transition functions revolutionized potential theory. He and I had an amusing interplay. I thought that his papers were difficult to read and decided to make them understandable to a wider audience, including me, by applying his approach in a simple context, potential theory on a countable set, based on a Markov transition function (a matrix in this context). He then trumped my paper in a paper explaining and going beyond mine. The sequence stopped there.

Hunt’s approach to potential theory had the unfortunate effect that many mathematicians thought of potential theory as a subchapter of probability theory and that potential theoretic notions are best defined probabilistically. When I wrote my potential theory book I tried to counteract this approach by dealing with classical potential theory first and probability — mostly martingale theory — in later chapters. The result was that even I was surprised to find that classical potential theory and martingale theory were so linked that what at first sight were purely probabilistic notions, such as the martingale crossing inequalities, were counterparts of nonprobabilistic potential theory, and that proofs in the latter theory gave proofs in the former by the simple device of interpreting, for example, \( h \) as a harmonic function in the one study and as a martingale in the other. The reduction operation on \( h \) is valid in both contexts and is a key link between them. I feel there must be a theory of which both theories are special cases but have had no success in devising one.

I was in close contact with Brelot in my potential theory work and learned much from him. When he told me he would like to write a book on modern potential theory but could not because he did not know the necessary probability theory, I was confident that he would not want to do the boring work of writing such a book and I thought it would be safe to tease him by offering to write the probability part of his book if he wrote the nonprobability part. My psychological analysis was right about him but defective about me, since my own book covered both parts.

The Illinois hike

Snell: While you have retired from the Mathematics Department at Illinois, you have not retired from the Illinois Saturday Hikes. We should close with some remarks about this institution.

Doob: The Saturday Hike was started in 1909 by a classics professor. Each Saturday the group drives to woods along a river. For many years some of the hikers hiked along the river and the others found an open area and played a primitive version of softball, but as the years progressed the numbers dwindled and finally there were too few for softball. On hot summer days there is swimming in a river or pond. A “sitting log” serving as the hikers’ base is found in the woods. In the evening a fire is built near the log, as large as needed for cooking and warmth, food is cooked and the problems of the university, Champaign-Urbana and the world are solved. Disagreement on a fact is settled by a Pie for the Hikers bet; the loser brings a pie after the fact has been researched.

The hikers stand around the fire or sit on the sitting log. On cold winter days the fires are large, and newspaper is spread over the sitters’ knees to protect them from the heat. The tradition is that the fire should be placed to make the smoke go into the sitters’ faces. Snow or light rain is mostly dissipated by the fire; if there is heavy rain, the fire is built under a nonporous bridge.

The hike is characterized by glorious irresponsibility in action and conversation and by heavy eating. “Hikers’ delight” is a renowned specialty: onions and hot peppers are fried in bacon fat; when the onions are done, the fat is poured into the fire, cheese is added and the frying continues until the cheese has melted. “We played softball in cow-pastures, fried our steak, stood on the fire and rocked the night with corny song” (Richmond Lattimore in the New Republic, November 13, 1961, in honor of the Saturday Hike founder; the singing stopped in the 1940s, when the singing leaders died).

I entered the group in 1939 and went regularly every Saturday. At that time as many as 30 came out, but now there are usually at most 10. The Saturday Hike is a treasured tradition, and members drive to Urbana for it from as far as Purdue, 90 miles away.

Snell: More impressive to me is your going on those winter hikes at age 87. I’ll see you next year at my annual hike visit, but I think I’ll make it November this time rather than January!