by Daniel W. Stroock

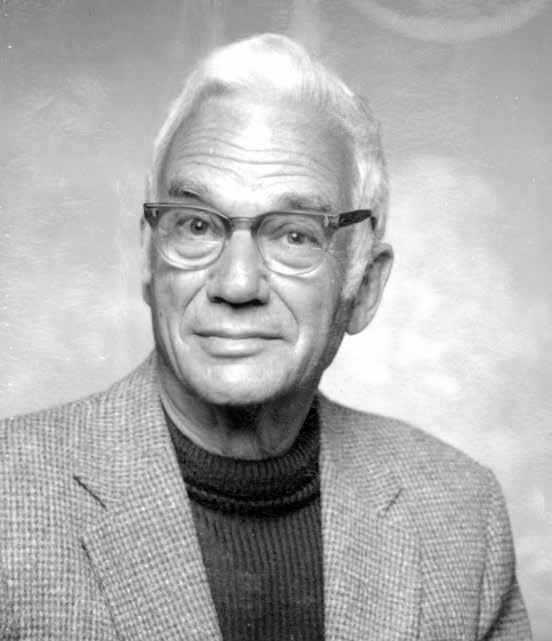

Joseph Leo Doob was born in Cincinnati, Ohio, and raised in New York City. For much of his career, he was the leading American-born probabilist, a status reflected by his election as president of the Institute of Mathematical Statistics in 1950 and as president of the American Mathematical Society in 1963. In addition, he was a member of the American Academy of Arts and Sciences, the National Academy of Sciences, and the French Academy of Sciences, and a fellow of the Royal Statistical Society. He was a recipient of the Steele Prize from the American Mathematical Society and the National Medal of Science from President Jimmy Carter.

Despite his many honors, Doob was a sincerely modest man who shunned adulation and took as much pride in his 25-year appointment as Commissar of the Urbana-Champaign Saturday Hike as he did in his presidencies of learned societies. His modesty did not spring from lack of confidence. He entered Harvard University as a freshman at age 16 and left with a Ph.D. six years later. Although the Harvard mathematics faculty had several luminaries, Doob was never cowed by them. He sat in on a course that G. D. Birkhoff gave on his mathematical theory of aesthetics and dropped out when he decided that Birkhoff’s formulation was not sufficiently rigorous. A large part of what Doob learned in his two years as a graduate student he absorbed while typing the manuscript of Marshall Stone’s famous treatise on linear operators.

Initially, Doob thought he would write his thesis under Stone but ended up writing it under J. L. Walsh when Stone said that he did not have an appropriate new problem to suggest. Doob’s earliest work was thus focused on refined questions, of the Fatou type, about the boundary behavior of analytic functions.

A freshly minted mathematics Ph.D. faced a tough job market in 1932, but, with the backing of Birkhoff and Stone, Doob won a two-year National Research Council Fellowship that enabled him to move to New York, where his wife was in medical school.

He spent two years at Columbia University, supposedly working with J. F. Ritt but in fact working more or less on his own. At the end of his NRC fellowship, the job prospects for young mathematicians had not improved and showed no signs of doing so any time soon. Following the advice of B. O. Koopman, who told him that his chances of getting a job would be better if he applied for one in statistics, Doob accepted a Carnegie Corporation grant that Harold Hotelling could get for him in Columbia’s statistics department. Thus it was cold financial necessity that deflected the trajectory of Doob’s mathematical career. The following year, he applied for and obtained one of the three or four jobs available then in mathematics, a position to teach statistics at the University of Illinois.

Until A. N. Kolmogorov provided it with a mathematical foundation in 1933, probability theory did not exist as a mathematical subject. Dating back to the 17th century, many mathematicians, including D. Bernoulli, P.-S. Laplace, P. de Fermat, B. Pascal, and C. Huygens, had made interesting calculations, but exactly what they were calculating was not, from a mathematical standpoint, well defined. Similarly, although C. F. Gauss himself had studied what he called the “theory of errors” and F. Galton had invoked Gauss’s ideas to lend scientific credence to his ideas about eugenics, the relationship between statistics and mathematics was even less clear. One can only imagine the reaction of someone like Doob, who found Birkhoff’s theory of aesthetics wanting in mathematical rigor, to these fields.

Faced with the challenge of not only learning statistics but also transforming it into a field that met his high standards, Doob set to work. Kolmogorov’s model of probability theory is based on Lebesgue’s theory of integration, and Doob was well versed in the intricacies of Lebesgue’s theory. One of Doob’s first breakthroughs was a theorem about stochastic processes. As long as one is dealing with a countable number of random variables, most questions that one can ask about them are answerable, at least in theory. However, the same is not true of an uncountable number of random variables. Kolmogorov had devised a ubiquitous procedure for constructing uncountable families of random variables, but his construction had a serious drawback. Namely, the only questions about the random variables constructed by Kolmogorov that were measurable were questions that could be formulated in terms of a countable subset of the random variables. Thus, for example, if one used Kolmogorov’s procedure to construct Brownian motion, then one ended up with a family of random variables that were discontinuous with outer measure 1 and yet continuous with outer measure 1. What Doob showed was that there is a canonical way to modify Kolmogorov’s construction so that questions like those of continuity became measurable; and, because this modification reduced questions about uncountably many random variables to questions about countably many, he called it the “separable” version. Although Kolmogorov’s construction is no longer the method of choice, and therefore Doob’s result is seldom used today, at the time even Kolmogorov acknowledged it as a substantive contribution.

Doob’s renown did not rest on his separability theorem. Instead, he was best known for his systematic development and application of what he called “martingales”[1]: a class of stochastic processes of either real- or complex-valued random variables parameterized by a linearly ordered set, usually either the non-negative integers or real numbers, with the property that increments are orthogonal to any measurable function, not just linear ones, of the earlier random variables. Thus, partial sums of mutually independent random variables with mean zero are martingales, but partial sums of trigonometric series generally are not.
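As a minimal sketch of the definition above, take the discrete-time, real-valued case and assume that each \( X_n \) is integrable; the symbols \( X_n \), \( Y_k \), and \( F \) are notation introduced here for illustration, not Doob’s own. The orthogonality of increments to functions of the past can then be written as

\[
\mathbb{E}\bigl[(X_{n+1} - X_n)\, F(X_0, \dots, X_n)\bigr] = 0 \quad \text{for every bounded measurable } F,
\]

which is equivalent to the conditional-expectation form

\[
\mathbb{E}\bigl[X_{n+1} \mid X_0, \dots, X_n\bigr] = X_n .
\]

If \( X_n = Y_1 + \cdots + Y_n \) with the \( Y_k \) mutually independent, integrable, and of mean zero, then the increment \( Y_{n+1} \) is independent of \( F(X_0, \dots, X_n) \), and the first identity holds because \( \mathbb{E}[Y_{n+1}] = 0 \).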

Martingales arise in a remarkable number of contexts, both outside and inside of probability theory. For example, J. Marcinkiewicz’s generalization of Lebesgue’s differentiation theorem can be seen as an early instance of what came to be known as Doob’s martingale convergence theorem, even though Marcinkiewicz’s argument was devoid of probabilistic reasoning. In a probabilistic setting, martingales had also appeared in the work of S. Bernstein, Kolmogorov, and P. Lévy. But it was Doob who first saw just how ubiquitous a concept they are, and it was the ideas he introduced that propelled martingales into the prominence they have enjoyed ever since. Of key importance were his convergence and stopping time theorems.

Strictly speaking, the convergence theorem was not an entirely new result, as both B. Jessen and Lévy had proved theorems from which it followed rather easily. However, Doob’s proof was far more revealing and introduced ideas that were as valuable as the result itself. In particular, he introduced the notion of a “stopping time”, a random time with the property that one can tell whether it has occurred by any fixed time \( t \) if one observes the martingale up to time \( t \). Thus, the first time that a martingale exceeds a level is a stopping time, but the last time that it does is not a stopping time. Doob’s stopping time theorem shows that the martingale property is not lost if one stops the martingale at a stopping time. His proof of his convergence theorem makes ingenious use of that fact.
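The distinction between first and last times can be checked on a toy example. The following is a minimal sketch, assuming a simple symmetric random walk started at 0 and a fixed horizon of 10 steps; these choices and all function names are illustrative and do not come from Doob’s work. It enumerates every path exactly and compares the mean of the walk at the first time it reaches a level (capped at the horizon, so that the stopping time is bounded) with its mean at the last such time.

```python
from fractions import Fraction
from itertools import product


def walk(steps):
    """Return the positions X_1, ..., X_N of a walk started at X_0 = 0."""
    positions, current = [], 0
    for step in steps:
        current += step
        positions.append(current)
    return positions


def value_at_first_hit(positions, level):
    """Walk value at the first time it is >= level, else its final value.

    Whether this time has occurred by step n depends only on X_1..X_n,
    so it is a (bounded) stopping time.
    """
    for x in positions:
        if x >= level:
            return x
    return positions[-1]


def value_at_last_hit(positions, level):
    """Walk value at the last time it is >= level, else its final value.

    Recognising the last visit needs the whole future path, so this
    time is not a stopping time.
    """
    hits = [x for x in positions if x >= level]
    return hits[-1] if hits else positions[-1]


def exact_mean(rule, horizon, level):
    """Average `rule` over all 2**horizon equally likely +/-1 paths."""
    paths = list(product((-1, 1), repeat=horizon))
    total = sum(rule(walk(path), level) for path in paths)
    return Fraction(total, len(paths))


HORIZON, LEVEL = 10, 3  # illustrative values, chosen arbitrarily
mean_first = exact_mean(value_at_first_hit, HORIZON, LEVEL)
mean_last = exact_mean(value_at_last_hit, HORIZON, LEVEL)
print("mean at first hitting time:", mean_first)
print("mean at last hitting time: ", mean_last)
```

Under these assumptions the first mean is exactly 0, the starting value, as the stopping time theorem predicts. The second is strictly positive, because deciding that a visit is the last one requires knowledge of the future.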

After proving these theorems, Doob turned his attention to applications. What he discovered was that martingales provided a bridge between partial differential equations and stochastic processes. The archetypal example of such a bridge is the observation that a harmonic function evaluated along a Brownian path is a continuous martingale. Once one knows this fact, S. Kakutani’s seminal result, the one that relates the capacitary potential of a set to the first time that a Brownian motion hits it, becomes an easy application of Doob’s stopping time theorem. In addition, the theorem lends itself to vast generalizations that lead eventually to the conclusion that, in a sense, there is an isomorphism between potential theory and the theory of Markov processes. One can easily imagine Doob’s joy when he used martingales to prove Fatou’s theorem about the way in which analytic functions in the disk approach their boundary values. Having been forced by the job market to abandon classical analysis for statistics, his revenge must have been sweet.
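In symbols, the bridge described above can be sketched as follows, under assumptions introduced here only for illustration: \( D \) is a bounded domain, \( u \) is continuous on the closure of \( D \) and harmonic inside it, \( (B_t) \) is a Brownian motion started at \( x \in D \), and \( \tau \) is the first time that \( B_t \) leaves \( D \). Then \( u(B_{t \wedge \tau}) \) is a bounded continuous martingale, and the stopping time theorem gives

\[
u(x) = \mathbb{E}_x\bigl[u(B_{t \wedge \tau})\bigr] \quad \text{for all } t \ge 0,
\qquad \text{hence, letting } t \to \infty, \qquad
u(x) = \mathbb{E}_x\bigl[u(B_\tau)\bigr].
\]

In other words, the value of \( u \) at an interior point is the average of its boundary values over the exit points of Brownian paths started there.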

Two books that appeared in the early 1950s were responsible for making probability a standard part of the American mathematics curriculum. The first was William Feller’s Probability Theory and its Applications,[2] which was a superb treatment that studiously avoided Lebesgue integration. Feller’s book set the standard for undergraduate probability texts, but it was Doob’s Stochastic Processes[3] that made probability theory respectable in the mathematical research community. The style of Doob’s book was very different from that of Feller’s. Examples and applications were everywhere dense in Feller’s book, whereas Doob’s was a brilliant but daunting compilation of technical facts, unembellished by examples. Doob’s goal was to show that probability theory could stand with any other branch of mathematical analysis, and he succeeded.

The fact that most young probabilists have never read Doob’s book can be seen as a testament to its success. In the years since its publication, it has spawned a myriad of books and articles that give friendlier accounts of the same material. Nonetheless, if it were not for Doob’s treatment of K. Itô’s stochastic integration theory, R. Merton’s interpretation of the Black–Scholes model would not have been possible. Of course, whether that is a reason for celebrating or condemning Doob’s book is a question whose answer is outside the scope of mathematics.

Typical of Doob’s dry humor and laconic style was a statement contained in his Who’s Who entry to the effect that he had made probability more rigorous but less fun. Although he was right on both counts, he had no reason to regret what he had done. Mathematicians owe him a huge and enduring debt of gratitude.

[Editor’s note: The original memoir was published with a select bibliography of Joseph Doob’s works. A complete bibliography can be found on the Works page of this volume.]